How to Batch SharePoint Online Operations in SPFx and Reduce Throttling

- Vincent P.

- Jul 29, 2025

When you process large volumes of data in SharePoint Online (SPO), you may run into throttling, which typically shows up as HTTP 429 (Too Many Requests) or 503 (Service Unavailable) responses.

This happens because SPO limits the rate of incoming requests to protect the service from overload.

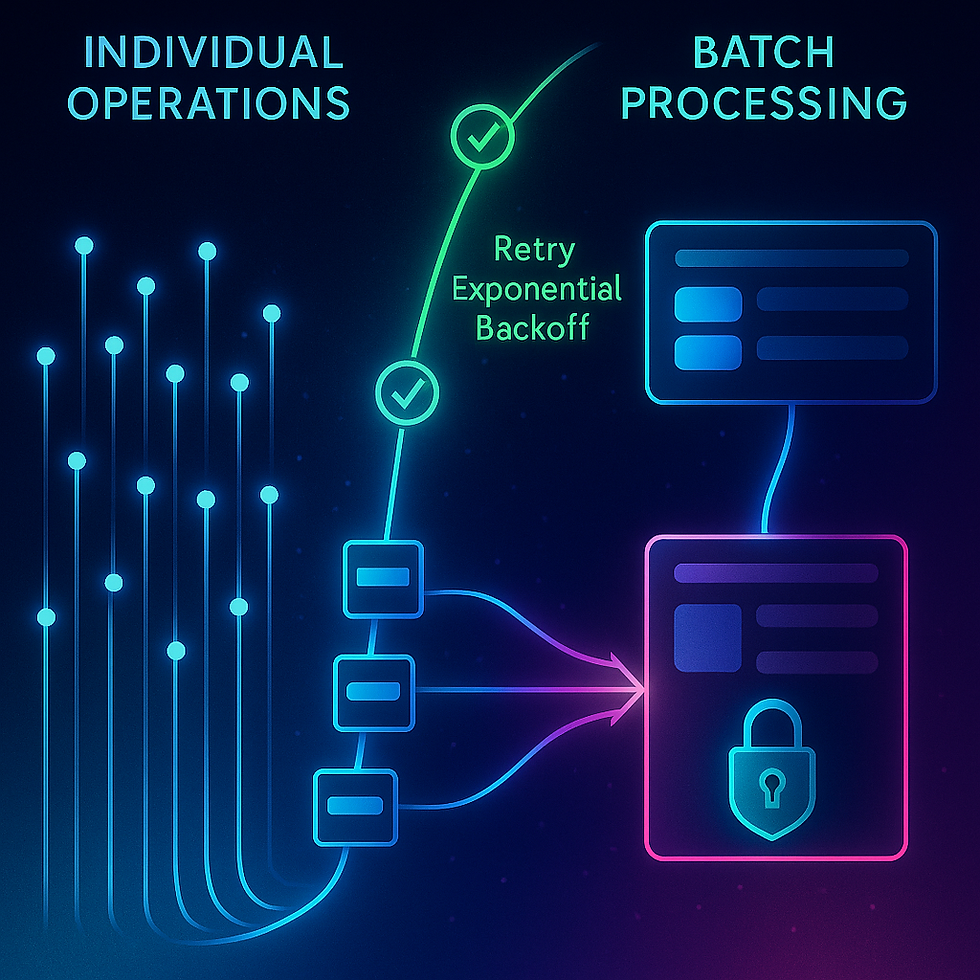
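When SharePoint Online throttles a request, the response typically includes a Retry-After header giving the number of seconds to wait, and Microsoft's throttling guidance recommends honoring it. Below is a minimal, dependency-free sketch of how a client could turn a status code and an optional Retry-After value into a wait time. The function name throttleDelayMs, the 500 ms base, and the fallback to exponential backoff are assumptions for illustration; the helpers in this post use plain exponential backoff only.

```typescript
// Hypothetical helper: how long to wait before retrying a throttled request.
// Returns undefined for statuses not treated as throttling (only 429 and 503 are).
function throttleDelayMs(
  status: number,
  attempt: number,             // 1 for the first retry, 2 for the second, ...
  retryAfter?: string | null,  // raw Retry-After header value, if present
  baseMs = 500
): number | undefined {
  if (status !== 429 && status !== 503) {
    return undefined;
  }
  // Prefer the server's hint when Retry-After is a whole number of seconds
  const hint = retryAfter?.trim();
  if (hint && /^\d+$/.test(hint)) {
    return Number(hint) * 1000;
  }
  // Otherwise fall back to exponential backoff: baseMs * 2^attempt
  return Math.pow(2, attempt) * baseMs;
}
```

With these rules, throttleDelayMs(429, 1, '120') returns 120000 and throttleDelayMs(503, 2) returns 2000. Retry-After may also be sent as an HTTP date; this sketch does not parse that form and falls back to backoff instead.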

In this post, we'll walk through a batching pattern for SPFx built around a processInBatches function (which uses the @pnp/sp library for SharePoint REST API calls), plus three helpers built on it for:

Creating items in bulk

Updating existing items

Deleting items efficiently

These tools will help you:

✅ Minimize throttling

✅ Retry failed operations automatically

✅ Provide progress feedback

✅ Keep payloads lean for performance

Batch SharePoint Online Operations in SPFx: Core batching logic

This is the heart of the batching strategy.

🔹 Purpose:

Splits the input items into chunks of chunkSize items each (default 100)

Sends each chunk to SharePoint as a single REST batch ($batch) request

Handles throttling errors (429 / 503) with automatic retries and exponential backoff

Optionally reports progress for better UX and logging
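The chunking step on its own is a plain array operation. Here is a minimal, dependency-free sketch; the name chunkArray is hypothetical. Unlike a bare for loop, it rejects a chunk size below 1, since a size of 0 would never advance the loop index.

```typescript
// Hypothetical helper: split items into consecutive arrays of at most `size` elements.
function chunkArray<T>(items: readonly T[], size: number): T[][] {
  if (!Number.isInteger(size) || size < 1) {
    // A size of 0 would make the loop below run forever
    throw new RangeError(`chunk size must be a positive integer, got ${size}`);
  }
  const chunks: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    chunks.push(items.slice(i, i + size));
  }
  return chunks;
}
```

For 250 items and the default size of 100, chunkArray returns three chunks of 100, 100, and 50 items, so three batch requests would be sent.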

📝 Function signature:

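```typescript
import { IList } from "@pnp/sp/lists";

// Queues the operations for one chunk of items on a batched list
type BatchAction = (batchedList: IList, chunk: any[]) => Promise<void> | void;

export interface IProcessInBatchCore {
  items: any[];                         // objects to create/update, or item IDs to delete
  listName: string;                     // list title, resolved with getByTitle()
  action: BatchAction;                  // supplied by each helper
  onProgressChange?: (message: string) => void;
  onProgressChangeIdentifier?: string;  // label for progress messages (assumed purpose)
  chunkSize?: number;                   // items per batch, default 100
  maxRetries?: number;                  // attempts per batch, default 5
}

// Options accepted by the helpers: everything except action
export type IProcessInBatch = Omit<IProcessInBatchCore, 'action'>;
```

🔍 How it works: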

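Step 1: Return early if items is empty or listName is missing.

Step 2: Split items into arrays of at most chunkSize items.

Step 3: For each chunk:

Queue the chunk's operations on a batched list and send them as one SharePoint batch request.

If the request fails with 429 or 503, wait and retry with exponential backoff (1 s, 2 s, 4 s, ... between attempts); any other error is rethrown immediately.

Abort and throw once maxRetries attempts (default 5) have failed.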
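The retry rules in Step 3 can be pulled out into a small, SharePoint-independent helper. Below is a sketch of a hypothetical retryOnThrottle function. It assumes, as the code in this post does, that a failed request rejects with an object carrying a numeric status. It retries only 429 and 503, treats maxRetries as the total number of attempts, waits Math.pow(2, attempt) * 500 ms between attempts, and skips the wait after the final attempt. The sleep function is injectable so the logic can be tested without real delays.

```typescript
type Sleep = (ms: number) => Promise<void>;

const defaultSleep: Sleep = ms => new Promise(resolve => setTimeout(resolve, ms));

// Hypothetical helper: run `operation`, retrying throttled failures (429/503)
// with exponential backoff. maxRetries counts total attempts, as in the post.
async function retryOnThrottle<T>(
  operation: () => Promise<T>,
  maxRetries = 5,
  sleep: Sleep = defaultSleep,
  baseMs = 500
): Promise<T> {
  let attempt = 0;
  while (true) {
    attempt++;
    try {
      return await operation();
    } catch (error: any) {
      const throttled = error?.status === 429 || error?.status === 503;
      if (!throttled || attempt >= maxRetries) {
        throw error; // not throttling, or out of attempts: surface the last error
      }
      // Wait 1 s, 2 s, 4 s, ... (with baseMs = 500) before the next attempt
      await sleep(Math.pow(2, attempt) * baseMs);
    }
  }
}
```

Unlike processInBatches, this sketch rethrows the last throttling error once attempts run out rather than a generic "Batch N failed" error; either choice works as long as callers know which one to expect.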

📦 Implementation:

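```typescript
import { SPFI } from "@pnp/sp";
import { createBatch } from "@pnp/sp/batching";
import "@pnp/sp/webs";
import "@pnp/sp/lists";
import "@pnp/sp/items";

// Assumption: _sp is an SPFI instance configured once in your web part, e.g.
// spfi().using(SPFx(this.context)) in onInit(). The original code does not show
// this wiring; setSP is a hypothetical helper for it.
let _sp: SPFI;
export const setSP = (sp: SPFI): void => { _sp = sp; };

const processInBatches = async ({
  items,
  listName,
  action,
  onProgressChange,
  onProgressChangeIdentifier = '',
  chunkSize = 100,
  maxRetries = 5
}: IProcessInBatchCore): Promise<void> => {
  if (!items?.length || !listName) return;
  if (chunkSize < 1) throw new RangeError('chunkSize must be at least 1'); // 0 would loop forever

  // Split the items into chunks of at most chunkSize
  const chunks: any[][] = [];
  for (let i = 0; i < items.length; i += chunkSize) {
    chunks.push(items.slice(i, i + chunkSize));
  }

  let batchIndex = 1;
  for (const chunk of chunks) {
    let success = false;
    let attempt = 0;

    while (!success && attempt < maxRetries) {
      try {
        // Fresh list object and batch for every attempt
        const list = _sp.web.lists.getByTitle(listName);
        const [batchedBehavior, execute] = createBatch(list);
        const batchedList = list.using(batchedBehavior);

        await action(batchedList, chunk); // queue the chunk's operations
        await execute();                  // send them in one batch request
        success = true;
      } catch (error: any) {
        attempt++;
        // Only throttling responses are retried; anything else is rethrown
        if (error?.status !== 429 && error?.status !== 503) throw error;
        // Exponential backoff (1 s, 2 s, 4 s, ...), skipped after the last attempt.
        // A retry re-sends the whole chunk, which is only safe if none of its operations were applied.
        if (attempt < maxRetries) {
          const delay = Math.pow(2, attempt) * 500;
          await new Promise(resolve => setTimeout(resolve, delay));
        }
      }
    }

    if (!success) {
      throw new Error(`Batch ${batchIndex} failed after ${maxRetries} attempts`);
    }

    // Report progress after each completed batch (message format is illustrative)
    onProgressChange?.(`${onProgressChangeIdentifier} Batch ${batchIndex} of ${chunks.length} completed`.trim());
    batchIndex++;
  }
};
```

🔑 Key benefits: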

Built-in throttling resilience.

Clear progress reporting (onProgressChange callback).

Flexible structure to support any list operation (action callback provided by caller).
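The post does not spell out the progress message format or the role of onProgressChangeIdentifier. One plausible use, assumed here, is a label that prefixes each message so several bulk operations can share one log or status area. The hypothetical createProgressReporter below builds such a callback and adds a percentage.

```typescript
type ProgressCallback = (message: string) => void;

// Hypothetical helper: builds a per-batch progress reporter.
// `identifier` plays the assumed role of onProgressChangeIdentifier.
function createProgressReporter(
  totalBatches: number,
  onProgressChange?: ProgressCallback,
  identifier = ''
): (completedBatches: number) => void {
  const prefix = identifier ? `${identifier}: ` : '';
  return completed => {
    const percent = Math.round((completed / totalBatches) * 100);
    // Optional call: reporting is a no-op when no callback was supplied
    onProgressChange?.(`${prefix}batch ${completed} of ${totalBatches} (${percent}%)`);
  };
}
```

For example, createProgressReporter(3, msg => console.log(msg), 'Projects import') returns a function that, called with 1, logs "Projects import: batch 1 of 3 (33%)".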

Utility helpers built on processInBatches

These helpers simplify common list operations:

createItemsWithRetries

updateItemsWithRetries

deleteItemsWithRetries

Each one wraps processInBatches and supplies the action that queues the matching operation (add, update, or recycle) for every item in a chunk.
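The helper actions below call items.add, update, and recycle without awaiting them. That is deliberate: in a PnPjs batch, a queued request's promise settles only after execute() has sent the batch, so awaiting it inside the action would stall before execute() is ever reached. The toy createToyBatch below is a hypothetical, in-memory stand-in (not the PnPjs API) that mimics this deferred behavior so it can be run in isolation.

```typescript
type Queue = <T>(op: () => T) => Promise<T>;

// Toy stand-in for a batch: queued operations run only when execute() is called.
function createToyBatch(): [Queue, () => Promise<void>] {
  const pending: Array<() => void> = [];

  // Queue an operation; its promise settles only when execute() runs it
  function queue<T>(op: () => T): Promise<T> {
    return new Promise<T>((resolve, reject) => {
      pending.push(() => {
        try {
          resolve(op());
        } catch (e) {
          reject(e);
        }
      });
    });
  }

  // Stand-in for the single round-trip: run everything queued so far, in order
  async function execute(): Promise<void> {
    for (const run of pending.splice(0)) {
      run();
    }
  }

  return [queue, execute];
}
```

The action pattern matches the helpers: queue one operation per item, then call execute() once per chunk. With the toy batch, awaiting a queued promise before execute() leaves it pending forever, which is exactly the trap the helpers avoid.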

ƒ createItemsWithRetries

📦 Purpose:

Efficiently batch-create new list items.

🔧 Implementation:

```typescript
export const createItemsWithRetries = async (payload: IProcessInBatch): Promise<void> => {
  if (!payload?.items?.length) return;

  await processInBatches({
    ...payload,
    action: (batchedList, chunk) => {
      for (const item of chunk) {
        // Queue the add without awaiting it; the batch sends it on execute()
        batchedList.items.add(item);
      }
    }
  });
};
```

🔨 Example:

```typescript
await createItemsWithRetries({
  listName: 'Projects',
  items: [
    { Title: 'Project 1' },
    { Title: 'Project 2' }
  ],
  chunkSize: 50,
  onProgressChange: msg => console.log(msg)
});
```

ƒ updateItemsWithRetries

📦 Purpose:

Batch update existing list items by ID.
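updateItemsWithRetries assumes every item carries a numeric ID; an item without one would reach getById(undefined) and fail only once the batch is sent. A hypothetical pre-check such as toUpdateOperations below (not part of the original helpers) separates IDs from fields and fails fast before any request goes out.

```typescript
interface IUpdateOperation {
  id: number;
  fields: Record<string, unknown>;
}

// Hypothetical pre-check: split each item into its ID and the fields to update,
// throwing before any request is sent if an ID is missing or invalid.
function toUpdateOperations(items: ReadonlyArray<Record<string, unknown>>): IUpdateOperation[] {
  return items.map((item, index) => {
    const { ID, ...fields } = item;
    if (typeof ID !== 'number' || !Number.isInteger(ID) || ID < 1) {
      throw new Error(`Item at index ${index} has no valid numeric ID`);
    }
    if (Object.keys(fields).length === 0) {
      throw new Error(`Item ${ID} has no fields to update`);
    }
    return { id: ID, fields };
  });
}
```

For instance, toUpdateOperations([{ ID: 1, Title: 'Updated Project 1' }]) yields one operation with id 1 and fields { Title: 'Updated Project 1' }, while an item without an ID throws immediately.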

🔧 Implementation:

```typescript
export const updateItemsWithRetries = async (payload: IProcessInBatch): Promise<void> => {
  if (!payload?.items?.length) return;

  await processInBatches({
    ...payload,
    action: (batchedList, chunk) => {
      for (const item of chunk) {
        // Separate the item ID from the fields to update, then queue the update
        const { ID, ...updates } = item;
        batchedList.items.getById(ID).update(updates);
      }
    }
  });
};
```

🔨 Example:

```typescript
await updateItemsWithRetries({
  listName: 'Projects',
  items: [
    { ID: 1, Title: 'Updated Project 1' },
    { ID: 2, Title: 'Updated Project 2' }
  ],
  onProgressChange: msg => console.log(msg)
});
```

ƒ deleteItemsWithRetries

📦 Purpose:

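Batch-recycle list items by ID. The helper calls recycle(), which moves items to the Recycle Bin; use delete() instead if you need permanent deletion.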

🔧 Implementation:

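```typescript
export const deleteItemsWithRetries = async (payload: IProcessInBatch): Promise<void> => {
  if (!payload?.items?.length) return;

  await processInBatches({
    ...payload,
    action: (batchedList, chunk) => {
      // Here chunk holds item IDs (numbers), not item objects
      for (const id of chunk) {
        // recycle() moves the item to the Recycle Bin; delete() would remove it permanently
        batchedList.items.getById(id).recycle();
      }
    }
  });
};
```

🔨 Example: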

```typescript
await deleteItemsWithRetries({
  listName: 'Projects',
  items: [10, 12, 14],
  chunkSize: 100,
  onProgressChange: msg => console.log(msg)
});
```

Best practices for effective batching

✅ Choose a sensible chunkSize:

The default of 100 is a reasonable starting point.

Use a lower value (e.g., 50) for large payloads or slower networks.

Tune it based on the throttling you observe in your tenant.

✅ Minimize payloads:

Send only the fields you need (Title, etc.).

Avoid excessive properties or unnecessary metadata.
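One way to keep payloads lean is to whitelist the fields you send. The generic pickFields below is a hypothetical helper, not part of the post's code; it copies only the named properties, so extra data loaded for display (long descriptions, metadata) never ends up in a request body.

```typescript
// Hypothetical helper: keep only whitelisted fields so each batched request body stays small.
function pickFields<T extends Record<string, unknown>, K extends keyof T>(
  item: T,
  fields: readonly K[]
): Pick<T, K> {
  const result = {} as Pick<T, K>;
  for (const field of fields) {
    // Skip fields the item doesn't have rather than sending undefined values
    if (field in item) {
      result[field] = item[field];
    }
  }
  return result;
}
```

For example, items.map(item => pickFields(item, ['ID', 'Title'])) trims update payloads before they are passed to updateItemsWithRetries.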

✅ Handle progress updates cleanly:

Use onProgressChange to log progress or provide UI feedback (e.g., status indicators or loading spinners).

✅ Trust the built-in retry strategy:

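Avoid wrapping these helpers in additional retry logic unless absolutely needed: nested retries multiply the number of requests sent while SharePoint is already throttling you.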

Summary

The processInBatches pattern and its utility helpers (createItemsWithRetries, updateItemsWithRetries, deleteItemsWithRetries) provide an efficient, resilient approach for handling bulk operations in SharePoint Online:

🔹 Batch requests = fewer round-trips

🔹 Smart retry with exponential backoff = reduced throttling impact

🔹 Progress feedback = better UX and debugging

With a configured PnPjs instance in place, you can adapt these helpers to your SPFx project to make bulk operations faster and more resilient to throttling.
